Statistical process control was pioneered by Dr. Walter A. Shewhart in the early 1920s. Dr. W. Edwards Deming later applied SPC methods in the United States during World War II, thereby successfully improving quality in the manufacture of munitions and other strategically important products. Dr. Deming was also instrumental in introducing SPC methods to Japanese industry after the war had ended.

Dr. Shewhart created the basis for the control chart and the concept of a state of statistical control through carefully designed experiments. While Dr. Shewhart drew from pure mathematical statistical theories, he understood that data from physical processes seldom produce a "normal distribution curve" (a Gaussian distribution, also commonly referred to as a "bell curve"). He discovered that observed variation in manufacturing data did not always behave the same way as data in nature (for example, Brownian motion of particles). Dr. Shewhart concluded that while every process displays variation, some processes display controlled variation that is natural to the process (common causes of variation), while others display uncontrolled variation that is not present in the process causal system at all times (special causes of variation).

In 1989, the Software Engineering Institute introduced the notion that SPC can be usefully applied to non-manufacturing processes, such as software engineering processes, in the Capability Maturity Model (CMM). This idea exists today within the Level 4 and Level 5 practices of the Capability Maturity Model Integration (CMMI). The notion that SPC is a useful tool when applied to non-repetitive, knowledge-intensive processes such as engineering processes has encountered much skepticism and remains controversial today.

The crucial difference between Shewhart’s work and the purpose of SPC that later emerged, which typically involved mathematical distortion and tampering, is that his developments were made in the context, and with the purpose, of process improvement, as opposed to mere process monitoring (i.e., they could be described as helping to get the process into that “satisfactory state” which one might then be content to monitor). Note, however, that a true adherent to Deming’s principles would probably never reach that situation, following instead the philosophy and aim of continuous improvement. W. Edwards Deming and Raymond T. Birge took notice of Dr. Shewhart’s work. Deming and Birge were intrigued by the issue of measurement error in science. Upon reading Shewhart’s insights, they wholly recast their approach in the terms that Shewhart advocated.

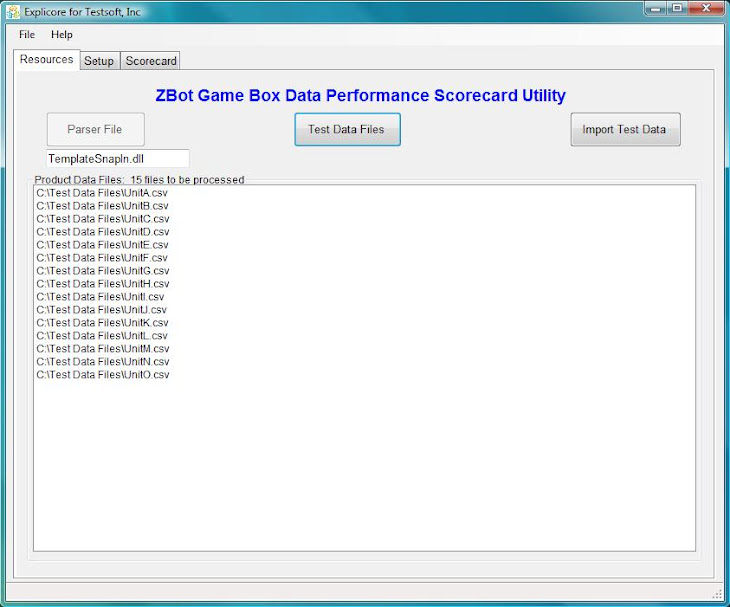

Statistical process control (SPC) is an effective method of monitoring a process through the use of control charts. Control charts enable the use of objective criteria, based on statistical techniques, for distinguishing background variation from events of significance. Much of the power of SPC lies in the ability to monitor both the process center and the variation about that center, by collecting data from samples at various points within the process. Variation in the process that may affect the quality of the end product or service can be detected and corrected, thus reducing waste as well as the likelihood that problems will be passed on to the customer. With its emphasis on early detection and prevention of problems, SPC has a distinct advantage over quality methods, such as inspection, that apply resources to detecting and correcting problems in the end product or service. In conjunction with SPC, TestSoft’s automated lean six sigma product, Explicore, enables companies to test the robustness of their manufacturing and design processes quickly. Explicore takes all parameters related to a product, process, or system, and within minutes identifies the parameters in need of correction or improvement. The output of Explicore is a statistically based report which identifies the key process indicators so a company can quickly identify where to put its resources to correct or improve problem areas.

In addition to reducing waste, SPC and Explicore can lead to a reduction in the time required to produce the product or service from end to end. This is partially due to a diminished likelihood that the final product will have to be reworked, but it may also result from using Explicore and SPC data to identify bottlenecks, wait times, and other sources of delays within the process. Process cycle-time reductions coupled with improvements in yield have made Explicore and SPC a set of valuable tools from both a cost reduction and a customer satisfaction standpoint. Explicore harvests a vast amount of data automatically, evaluates the health of the product, process, and system effectively and consistently, and saves time and money, reducing the total cost of product ownership. That said, when we speak about SPC, we are also speaking about using Explicore to harvest the data at an accelerated rate.

General

The following description relates to manufacturing rather than to the service industry, although the principles of SPC in conjunction with Explicore can be successfully applied to either. For a description and example of how SPC applies to a service environment, refer to Lon Roberts’ book SPC for Right-Brain Thinkers: Process Control for Non-Statisticians (2005). SPC has also been successfully applied to detecting changes in organizational behavior with Social Network Change Detection, introduced by McCulloh (2007). Paul Selden, in his book Sales Process Engineering: A Personal Workshop (1997), describes how to use SPC in the fields of sales, marketing, and customer service, using Deming's famous Red Bead Experiment as an easy-to-follow demonstration. In conjunction with SPC, Explicore can be used in a number of different settings: simply submit the data to Explicore and the product will indicate the areas in need of improvement or correction.

In mass-manufacturing, the quality of the finished article was traditionally achieved through post-manufacturing inspection of the product; accepting or rejecting each article (or samples from a production lot) based on how well it met its design specifications. In contrast, Statistical Process Control uses statistical tools to observe the performance of the production process in order to predict significant deviations that may later result in rejected product.

Two kinds of variation occur in all manufacturing processes, common cause and special cause, and both types cause subsequent variation in the final product. The first is known as natural or common cause variation and may be variation in temperature, properties of raw materials, strength of an electrical current, etc. This variation is small, the observed values generally being quite close to the average value. The pattern of variation will be similar to that found in nature, and the distribution forms the bell-shaped normal distribution curve. The second kind of variation is known as special cause variation, and happens less frequently than the first.

For example, a breakfast cereal packaging line may be designed to fill each cereal box with 500 grams of product, but some boxes will have slightly more than 500 grams, and some will have slightly less, in accordance with a distribution of net weights. If the production process, its inputs, or its environment changes (for example, the machines doing the manufacture begin to wear), this distribution can change. For example, as its cams and pulleys wear out, the cereal filling machine may start putting more cereal into each box than specified. If this change is allowed to continue unchecked, more and more product will be produced that falls outside the tolerances of the manufacturer or consumer, resulting in waste. While in this case the waste is in the form of "free" product for the consumer, typically waste consists of rework or scrap.

By observing at the right time what happened in the process that led to a change, the quality engineer or any member of the team responsible for the production line can troubleshoot the root cause of the variation that has crept into the process and correct the problem.

SPC indicates when an action should be taken in a process, but it also indicates when NO action should be taken. An example is a person who would like to maintain a constant body weight and takes weight measurements weekly. A person who does not understand SPC concepts might start dieting every time his or her weight increased, or eat more every time his or her weight decreased. This type of action could be harmful and possibly generate even more variation in body weight. SPC would account for normal weight variation and better indicate when the person is in fact gaining or losing weight.
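The effect of over-reacting to ordinary variation can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the target weight, the noise level, and the adjustment rule (shifting the aim by the full size of the last deviation) are hypothetical choices, not taken from the text above.

```python
import random
import statistics

def simulate(n_weeks, target=70.0, sigma=0.5, tamper=False, seed=1):
    """Return weekly readings around a target, optionally 'correcting' after each one."""
    rng = random.Random(seed)
    setting = target  # where the process is currently aimed
    readings = []
    for _ in range(n_weeks):
        reading = setting + rng.gauss(0.0, sigma)  # common cause noise only
        readings.append(reading)
        if tamper:
            # Over-reaction: shift the aim by the full size of the last deviation.
            setting -= reading - target
    return readings

left_alone = statistics.stdev(simulate(5000, tamper=False))
tampered = statistics.stdev(simulate(5000, tamper=True))
print(f"left alone: {left_alone:.3f}  tampered: {tampered:.3f}")
```

Under these assumptions each tampered reading carries both the current noise and the reversed noise of the previous week, so the spread grows by a factor of roughly the square root of two rather than shrinking.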

How to use SPC

Initially, one starts with a set of data from a manufacturing process for a specific metric, e.g. the mass, length, or surface energy of a widget. One example may be a manufacturing process for a type of nanoparticle in which two parameters are key to the process: particle mean diameter and surface area. With the existing data, one would calculate the sample mean and sample standard deviation. The upper control limit of the process would be set to the mean plus three standard deviations, and the lower control limit would be set to the mean minus three standard deviations. The action taken depends on the statistic and on where each run lands on the SPC chart, so as to control but not tamper with the process. The criticality of the process can be defined by which Westinghouse rules are applied. The only way to reduce natural variation is through improvement to the product, process technology, and/or system.
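The limit calculation described above can be sketched in a few lines of Python. This is a minimal illustration, not a definitive implementation: the function name `control_limits` and the diameter values are hypothetical, chosen only to show the arithmetic.

```python
import statistics

def control_limits(baseline):
    """Return (lower, center, upper) as the mean -/+ 3 sample standard deviations."""
    center = statistics.mean(baseline)
    sd = statistics.stdev(baseline)  # sample standard deviation (n - 1 denominator)
    return center - 3 * sd, center, center + 3 * sd

# Hypothetical particle mean diameters (nm) from past runs of the process.
baseline = [50.1, 49.8, 50.3, 49.9, 50.0, 50.2, 49.7, 50.1, 49.9, 50.0]
lcl, center, ucl = control_limits(baseline)

# New runs are compared against limits fixed from the baseline data.
new_runs = [50.1, 49.9, 51.2]
signals = [x for x in new_runs if not lcl <= x <= ucl]
print(f"LCL={lcl:.3f} center={center:.3f} UCL={ucl:.3f} signals={signals}")
```

Runs inside the limits call for no adjustment; a run outside them, such as the last hypothetical value here, is a prompt to look for a special cause.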

Explanation and Illustration:

What do “in control” and “out of control” mean?

Suppose that we are recording, regularly over time, some measurements from a process. The measurements might be lengths of steel rods after a cutting operation, or the lengths of time to service some machine, or your weight as measured on the bathroom scales each morning, or the percentage of defective (or non-conforming) items in batches from a supplier, or measurements of Intelligence Quotient, or times between sending out invoices and receiving the payment etc.

A series of line graphs or histograms can be drawn to represent the data as a statistical distribution. It is a picture of the behavior of the variation in the measurement that is being recorded. If a process is deemed “stable”, it is said to be in statistical control. The point is that if an outside influence impacts upon the process (e.g., a machine setting is altered or you go on a diet), then, in effect, the data are no longer all coming from the same source. It therefore follows that no single distribution could possibly serve to represent them. If the distribution changes unpredictably over time, then the process is said to be out of control. As a scientist, Shewhart knew that there is always variation in anything that can be measured. The variation may be large, or it may be imperceptibly small, or it may be between these two extremes; but it is always there.

What inspired Shewhart’s development of the statistical control of processes was his observation that the variability which he saw in manufacturing processes often differed in behavior from that which he saw in so-called “natural” processes – by which he seems to have meant such phenomena as molecular motions.

Wheeler and Chambers combine and summarize these two important aspects as follows:

"While every process displays variation, some processes display controlled variation, while others display uncontrolled variation."

In particular, Shewhart often found controlled (stable) variation in natural processes and uncontrolled (unstable) variation in manufacturing processes. The difference is clear. In the former case, we know what to expect in terms of variability; in the latter we do not. We may predict the future, with some chance of success, in the former case; we cannot do so in the latter.

Why are "in control" and "out of control" important?

Shewhart gave us a technical tool to help identify the two types of variation: the control chart.

What is important is the understanding of why correct identification of the two types of variation is so vital. There are at least three prime reasons.

First, when there are irregular large deviations in output because of unexplained special causes, it is impossible to evaluate the effects of changes in design, training, purchasing policy, etc. which might be made to the system by management. The capability of a process is unknown while the process is out of statistical control.

Second, when special causes have been eliminated, so only common causes remain, improvement has to depend upon someone’s action. For such variation is due to the way that the processes and systems have been designed and built – and only those responsible have authority and responsibility to work on systems and processes. As Myron Tribus, Director of the American Quality and Productivity Institute, has often said: “The people work in a system – the job of the manager is to work on the system and to improve it, continuously, with their help.”

Third, something of great importance, but which has to be unknown to managers who do not have this understanding of variation, is that by (in effect) misinterpreting either type of cause as the other, and acting accordingly, they not only fail to improve matters – they literally make things worse.

These implications, and consequently the whole concept of the statistical control of processes, had a profound and lasting impact on Dr. Deming. Many aspects of his management philosophy emanate from considerations based on just these notions.

Why SPC?

The fact is that when a process is within statistical control, its output is indiscernible from random variation: the kind of variation which one gets from tossing coins, throwing dice, or shuffling cards. Whether or not the process is in control, the numbers will go up, the numbers will go down; indeed, occasionally we shall get a number that is the highest or the lowest for some time. Of course we shall: how could it be otherwise? The question is: do these individual occurrences mean anything important? When the process is out of control, the answer will sometimes be yes. When the process is in control, the answer is no.

So the main response to the question Why SPC? is this: it guides us to the type of action that is appropriate for trying to improve the functioning of a process. Should we react to individual results from the process (which is only sensible, if such a result is signaled by a control chart as being due to a special cause) or should we be going for change to the process itself, guided by cumulated evidence from its output (which is only sensible if the process is in control)?

Process improvement needs to be carried out in three chronological phases:

- Phase 1: Stabilization of the process by the identification and elimination of special causes;

- Phase 2: Active improvement efforts on the process itself, i.e. tackling common causes;

- Phase 3: Monitoring the process to ensure the improvements are maintained, and incorporating additional improvements as the opportunity arises.

Control charts have an important part to play in each of these phases. Points beyond control limits (plus other agreed signals) indicate when special causes should be searched for. The control chart is therefore the prime diagnostic tool in Phase 1. All sorts of statistical tools can aid Phase 2, including Pareto Analysis, Ishikawa Diagrams, flow-charts of various kinds, etc., and recalculated control limits will indicate what kind of success (particularly in terms of reduced variation) has been achieved. The control chart will also, as always, show when any further special causes should be attended to. Advocates of the Numerical Evaluation of Metrics (NEM) approach will consider themselves familiar with the use of the control chart in Phase 3. However, it is strongly recommended that they consider using NEM in conjunction with Explicore in order to see how much more can be done even in this phase than is normal practice.

An Introduction to Numerical Evaluation of Metrics

Numerical evaluation of metrics indicates that one must manage the input (or cause) instead of managing the output (results). A transfer function, Y = f(X), describes the relationship between lower level requirements and higher level requirements. The transfer function can be developed to define the relationship of elements and help control a process. By managing the inputs, we will be able to identify and improve the multiple processes that each contribute something to the variation of the output, which is necessary for real improvement. We will also need to manage the relationship of the processes to one another. Another consideration is that the comparison becomes one of process variation versus design tolerances, where the center of the process is independent of the design center and of the upper and lower specification limits. Before we discuss NEM further, let’s understand common cause and special cause variation. Common cause variation exists within the upper and lower control limits, and special cause variation exists outside the upper and lower control limits, as seen below:

Variation exists in everything. However...

"A fault in the interpretation of observations, seen everywhere, is to suppose that every event (defect, mistake, accident) is attributable to someone (usually the nearest at hand), or is related to some special event. The fact is that most troubles with service and production lie in the system. Sometimes the fault is indeed local, attributable to someone on the job or not on the job when he should be. We speak of faults of the system as common causes of trouble, and faults from fleeting events as special causes." -

W. Edwards Deming

We are able to go beyond SPC by using the numerical evaluation of metrics (NEM).

- Measurements will display variation.

- There is a model (the common cause / special cause model) that differentiates this variation into either assignable variation, which is due to “special” sources, or common cause variation. Control charts must be used to differentiate the variation.

- This is the Shewhart / Deming Model

- Remember, a metric that is in control implies a stable, predictable amount of variation (of common cause variation). This, however, does not mean a “good” or desirable amount of variation – reference the Western Electric Rules.

- A metric that is out of control implies an unstable, unpredictable amount of variation. It is subject to both common cause and special cause variation.
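The Western Electric Rules mentioned in the list above are commonly stated as four zone tests against the center line and the 1, 2, and 3 sigma bands. The following is a minimal sketch of those four tests; the function name and the sample values are hypothetical, and the center line and sigma are assumed to have been estimated beforehand from in-control data.

```python
def western_electric_signals(values, center, sigma):
    """Return {index: [rule numbers]} for points that complete a zone-rule pattern."""
    z = [(v - center) / sigma for v in values]
    hits = {}

    def run_rule(rule, window, needed, threshold):
        for end in range(window - 1, len(z)):
            recent = z[end - window + 1 : end + 1]
            for side in (1, -1):
                count = sum(1 for s in recent if side * s > threshold)
                # Flag only when the newest point itself is part of the pattern.
                if count >= needed and side * z[end] > threshold:
                    hits.setdefault(end, []).append(rule)

    run_rule(1, 1, 1, 3.0)  # one point beyond 3 sigma
    run_rule(2, 3, 2, 2.0)  # two of three beyond 2 sigma, same side
    run_rule(3, 5, 4, 1.0)  # four of five beyond 1 sigma, same side
    run_rule(4, 8, 8, 0.0)  # eight in a row on one side of the center line
    return hits

# Hypothetical standardized readings (center 0, sigma 1).
print(western_electric_signals([0.2, -0.5, 3.4, 0.1, 2.3, 2.5, -0.3], 0.0, 1.0))
```

A point with no entry in the result shows only the pattern expected from common cause variation under these tests; it says nothing about whether that amount of variation is desirable.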

Rational sub-grouping is one of the key items that make control charts work. Rational sub-grouping means that we have some kind of “rationale” for how we sub-grouped the data. As we look at Control Charts we need to ask ourselves four questions:

- What x’s and noise are changing within the subgroup?

- What x’s and noise are not changing within the subgroup?

- What x’s and noise are changing between the subgroups?

- What x’s and noise are not changing between the subgroups?

Our goal is to improve the processes – SPC/NEM helps by recognizing the extent of variation that now exists so we do not overreact to random variation. To accomplish this we need to study the process to identify sources of variation and then act to eliminate or reduce those sources of variation. We expand on these ideas to report the state of control, monitor for maintenance, determine the magnitude of effects due to changes on the process, and discover sources of variation.

Some typical numeric indicators are: labor, material, and budget variances; scrap; ratios such as inventory levels and turns, and asset turnover; gross margin; and schedules. Any measure will fluctuate over time regardless of the appropriateness of the numerical indicator. These numbers are a result of the many activities and decisions that are frequently outside the range of a manager’s responsibility.

Some metrics, including organizational metrics (the output “Ys”), are influenced by multiple tasks, functional areas, and processes. If we believe in managing the ‘causes’ (the input “Xs”) instead of the ‘results’ (the “Ys” in the transfer function Y = f(X)), then the identification and improvement of the multiple processes that each contribute something to the variation in the “Ys” is necessary for improvement. The management of the relationship of these processes to each other is also required. Otherwise, attempting to directly manage these “Ys” can lead to dysfunctional portions of the product, process, or system.
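One way to see how several inputs each contribute to the variation in an output is to work through a simple case. The sketch below assumes, purely for illustration, a linear transfer function Y = a1*X1 + a2*X2 + ... with independent inputs, so that the variance of Y is the sum of the squared coefficient-times-standard-deviation terms; neither the linear form nor the input names and numbers come from the text above.

```python
def variance_contributions(coefficients, input_sds):
    """Share of Var(Y) owed to each input for Y = sum(a_i * X_i), inputs independent."""
    parts = {name: (coefficients[name] * input_sds[name]) ** 2 for name in coefficients}
    total = sum(parts.values())
    return {name: part / total for name, part in parts.items()}

# Hypothetical inputs: sensitivity of Y to each X, and each X's standard deviation.
coefficients = {"temperature": 2.0, "pressure": 0.5}
input_sds = {"temperature": 1.0, "pressure": 2.0}
shares = variance_contributions(coefficients, input_sds)
print(shares)
```

In this hypothetical case most of the output variation traces back to one input, which is where improvement effort on the “Xs” would pay off first.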

Currently, judgments as to the magnitude of the variation in these numerical indicators are based on: comparison to a forecast, goal, or expectation; comparison to a result of the same kind in a previous time period; and intuition and experience. Judgments made in this context ignore: the time series that produced the number; the cross-functional nature of the sources of variation; the appropriateness of that measure for improvement purposes; and the complete set of “Xs” that must be managed to improve product parameters.

A review of the preliminary guidelines for NEM:

- Control limits are calculated from a time series of the metric

- Different formulas are available – depending on the type of data.

- Control limits should NOT be recalculated each time data is collected

- The observed metrics (and control limits) are a function of the sampling and sub-grouping plan

- Variation due to ‘assignable cause’ is often the easiest variation to reduce

- The most commonly monitored metrics are the outputs (“Ys”)

- These metrics may be a function of one process or many processes

- Control limits are not related to standards nor are they specifications. Control limits are a measure of what the process does or has done. It is the present / past tense, not the future – where we want the process to be

- Control limits identify the extent of variation that now exists so that we do not overreact to ‘random’ variation.
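As an illustration of the guideline that different formulas apply to different types of data, the sketch below computes limits for two common cases: an individuals (XmR) chart for single measurements and a p chart for the fraction defective. The function names and data are hypothetical; the constant 2.66 is the usual 3/d2 factor for moving ranges of two points, and the p chart version assumes a constant sample size.

```python
import math
import statistics

def individuals_limits(values):
    """Individuals (XmR) chart: limits from the average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    center = statistics.mean(values)
    mr_bar = statistics.mean(moving_ranges)
    # 2.66 = 3 / d2, with d2 = 1.128 for moving ranges of two points.
    return center - 2.66 * mr_bar, center, center + 2.66 * mr_bar

def p_chart_limits(defectives, sample_size):
    """p chart: limits for the fraction defective with a constant sample size."""
    p_bar = sum(defectives) / (len(defectives) * sample_size)
    half_width = 3 * math.sqrt(p_bar * (1 - p_bar) / sample_size)
    return max(0.0, p_bar - half_width), p_bar, min(1.0, p_bar + half_width)

# Hypothetical data: five single measurements, and defect counts in samples of 100.
print(individuals_limits([10, 12, 11, 13, 12]))
print(p_chart_limits([4, 6, 5, 5], 100))
```

In keeping with the guidelines above, limits like these would be computed once from a baseline time series and then held fixed while new data are plotted against them.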

Diagnosing the causes of variation

Charts that are ‘in control’ tell you that variation is within the subgroup, and charts that are ‘out of control’ tell you that variation is between subgroups. We can use control charts and the related control limits to view and evaluate some of the potential causes of variation within a process. This view of the process can be used to estimate the improvement opportunity if the identified cause or causes of variation were removed or reduced. The technique that we use is called rational sub-grouping. Rational sub-grouping means that we have some kind of “rationale” for how we sub-grouped the data. In other words, we are conscious of the question we are asking with our sub-grouping strategy and will take appropriate action based on the results the control charts provide. This is where Explicore will be able to help identify where the problems, if any, exist. Explicore captures like data, characterizes that data, and performs a preliminary analysis of the data. This quickly and consistently identifies the parameters that require attention.

In rational sub-grouping, the total variation of any system is treated as composed of multiple causes: setup procedures, the product itself, process conditions, or maintenance processes. By sub-grouping data such that samples from each of these separate conditions are placed in separate sub-groups, we can explore the nature of variation of the system or equipment. The goal is to establish a subgroup (or sampling strategy) small enough to exclude systematic non-random influences. The intended result is to generate data exhibiting only common cause variation within groups of items and special cause variation (if it exists) between groups.
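The idea that within-subgroup variation sets the yardstick for judging between-subgroup differences can be sketched with an X-bar and R chart calculation. This is an illustration under assumptions: the subgroups are hypothetical (each imagined as five items from one setup), and A2, D3, and D4 are the standard tabulated chart constants for subgroups of size five.

```python
import statistics

# Standard control chart constants for subgroups of size 5.
A2, D3, D4 = 0.577, 0.0, 2.114

def xbar_r_limits(subgroups):
    """Return X-bar and R chart limits for equally sized subgroups of five."""
    if any(len(g) != 5 for g in subgroups):
        raise ValueError("constants above apply to subgroups of size 5 only")
    means = [statistics.mean(g) for g in subgroups]
    ranges = [max(g) - min(g) for g in subgroups]
    grand_mean = statistics.mean(means)
    r_bar = statistics.mean(ranges)  # average within-subgroup range
    return {
        "xbar": (grand_mean - A2 * r_bar, grand_mean, grand_mean + A2 * r_bar),
        "range": (D3 * r_bar, r_bar, D4 * r_bar),
        "means": means,
    }

# Hypothetical measurements: one subgroup per setup, third setup shifted.
subgroups = [
    [10.0, 10.2, 9.8, 10.1, 9.9],
    [10.1, 9.9, 10.0, 10.2, 9.8],
    [10.5, 10.7, 10.6, 10.4, 10.8],
    [9.9, 10.1, 10.0, 9.8, 10.2],
    [10.0, 9.8, 10.2, 10.1, 9.9],
]
chart = xbar_r_limits(subgroups)
lower, center, upper = chart["xbar"]
flagged = [i for i, m in enumerate(chart["means"]) if not lower <= m <= upper]
print(f"X-bar limits: {lower:.3f} to {upper:.3f}, flagged subgroups: {flagged}")
```

Because the limits for the subgroup means are built from the average within-subgroup range, a subgroup mean falling outside them points to a difference between subgroups that the within-subgroup (common cause) variation cannot explain.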

Numerical Evaluation of Metrics is being usefully applied to non-manufacturing processes, including those addressed by the Capability Maturity Model (CMM). NEM is a useful tool when applied to non-repetitive, knowledge-intensive processes such as engineering processes. Remember, if we can control the input to a product, process, service, system, and the like, then the output should remain in control.

Overall, we are interested in the average of the process, the “long-term” variation, and the “short-term” variation. Understanding the variation as quickly as possible is important to the success of a company. Essentially, how fast we get to the problems, improve or correct them, and stabilize the product, process, system, etc. determines how much the company's performance and financial health improve. This is where TestSoft’s automated product, Explicore, is able to help: Explicore consistently identifies the key process indicators so a company can quickly identify where to put valuable resources to correct or improve problem areas effectively and efficiently, which ultimately reduces the total cost of product ownership.